Project Portfolio

A collection of some of my projects

Overview

Before beginning, let's talk about the elephant in the room: NDAs. As you probably already know, industry projects are protected by the NDA I signed with the company in question, so they are not listed on this page. If you'd like to know about them, have a look at my professional overview on LinkedIn or drop me an email. The projects listed here are either educational projects done at Purdue or Carnegie Mellon, or personal tinkering I've done in my free time.

When it comes to projects, I'm focusing on quality over quantity. So, instead of listing everything I've done, I'm listing the most memorable projects, or those that turned out to be the most interesting. Unfortunately, I may not have all the resources from previous projects, due to an over-reliance on the university SVN and a healthy dose of student laziness about syncing my own version control with the university repos. In such cases, I will just write a bit about the project and what was done, and might link the last version that I have, along with a disclaimer that it was not the 'final, shipped' version.

3D Graphics Processing Unit (Purdue University)

This was a three-person team project, completed in approximately 8 weeks during Fall 2010 for an ASIC design class. For class credit, we were given the opportunity to choose any viable, ASIC-appropriate functionality and design a chip that could be sent out for actual fabrication. My team chose to go for the big guns and tackle a 3D GPU directly. The project was done in VHDL and used simulation, layout and design-checking tools (like ModelSim and Quartus) provided by Purdue. The major constraints were fitting the design inside a 3 mm x 3 mm area and using fewer than 40 pins. We also had to handle the mathematical side of the GPU and graphics on our own, since this was a generic chip design class that provided no background on it.

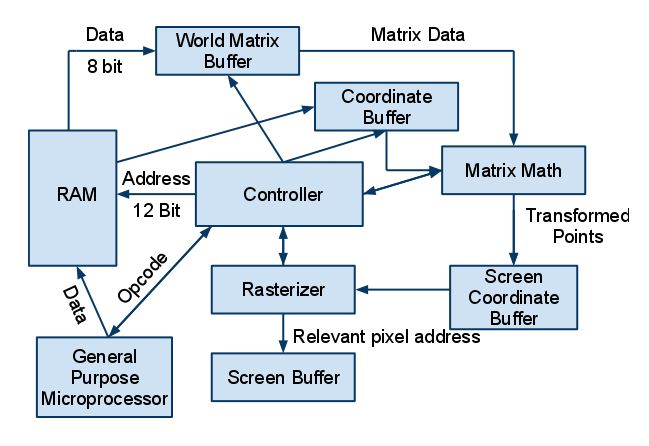

A highly simplified block diagram is given in Figure 1. We created all the blocks apart from the general-purpose microprocessor, the idea being that our GPU could be used with any microprocessor. We used Bresenham's algorithm (ported to hardware) for rasterization, an optimized matrix math block for efficient matrix multiplication, and a controller block to orchestrate everything. We also created our own RAM and used buffers as appropriate. I was responsible for the mathematical aspects: the matrix multiplication and the World Matrix and Coordinate buffers. The size constraint meant that we could not afford the built-in VHDL dividers and had to minimize the number of multipliers. We also needed fractional numbers, but general floating-point math would have taken up too much chip area. So I introduced a fixed-point representation for fractional numbers and structured the matrix multiplications efficiently (re-using the same multipliers, etc.), which enabled us to meet our constraints.

Figure 1: Simplified block diagram
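To illustrate the fixed-point and shared-multiplier ideas from the GPU paragraph above, here is a minimal Python sketch (not the original VHDL). It assumes a hypothetical signed 16-bit format with 8 fractional bits; the real design's word width may differ. Multiplying two fixed-point values needs only an integer multiply followed by a right shift, so no divider is involved, and `mat_vec_fixed` sends every product of a 4x4 matrix-vector multiply through the same `fixed_mul` in sequence, the software analogue of time-sharing one hardware multiplier. All names (`to_fixed`, `fixed_mul`, `mat_vec_fixed`, `translation`) are illustrative.

```python
# Minimal fixed-point sketch. ASSUMPTION: a hypothetical signed 16-bit word
# with 8 fractional bits (value = raw / 256); the real design may differ.
FRAC_BITS = 8
WORD_BITS = 16
RAW_MIN = -(1 << (WORD_BITS - 1))
RAW_MAX = (1 << (WORD_BITS - 1)) - 1


def to_fixed(x: float) -> int:
    """Encode a real number as a fixed-point integer, saturating on overflow."""
    raw = int(round(x * (1 << FRAC_BITS)))
    return max(RAW_MIN, min(RAW_MAX, raw))


def to_float(raw: int) -> float:
    """Decode a fixed-point integer back to a real number."""
    return raw / (1 << FRAC_BITS)


def fixed_mul(a: int, b: int) -> int:
    """Multiply two fixed-point values: one integer multiply plus an
    arithmetic right shift, so no divider is needed."""
    return (a * b) >> FRAC_BITS


def mat_vec_fixed(m, v):
    """Multiply a 4x4 fixed-point matrix by a 4-element vector, routing every
    product through the same fixed_mul (one shared 'multiplier', one product
    per 'cycle'). Returns the result vector and the number of multiply cycles.
    The accumulator is an unbounded Python int; hardware would use a wider
    accumulator register."""
    result, cycles = [], 0
    for row in m:
        acc = 0
        for a, b in zip(row, v):
            acc += fixed_mul(a, b)
            cycles += 1
        result.append(acc)
    return result, cycles


def translation(tx: float, ty: float, tz: float):
    """Build a 4x4 fixed-point translation matrix (homogeneous coordinates)."""
    one = to_fixed(1.0)
    return [
        [one, 0, 0, to_fixed(tx)],
        [0, one, 0, to_fixed(ty)],
        [0, 0, one, to_fixed(tz)],
        [0, 0, 0, one],
    ]


# Usage: translate the point (1, 2, 3) by (0.5, -1.25, 2).
point = [to_fixed(c) for c in (1.0, 2.0, 3.0, 1.0)]
moved, n_cycles = mat_vec_fixed(translation(0.5, -1.25, 2.0), point)
print([to_float(c) for c in moved], n_cycles)  # [1.5, 0.75, 5.0, 1.0] 16
```

Sharing one multiplier is the usual area-for-latency trade: a single multiplier needs 16 cycles for this 4x4 matrix-vector product, while one multiplier per row would finish in 4 cycles at four times the multiplier area.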

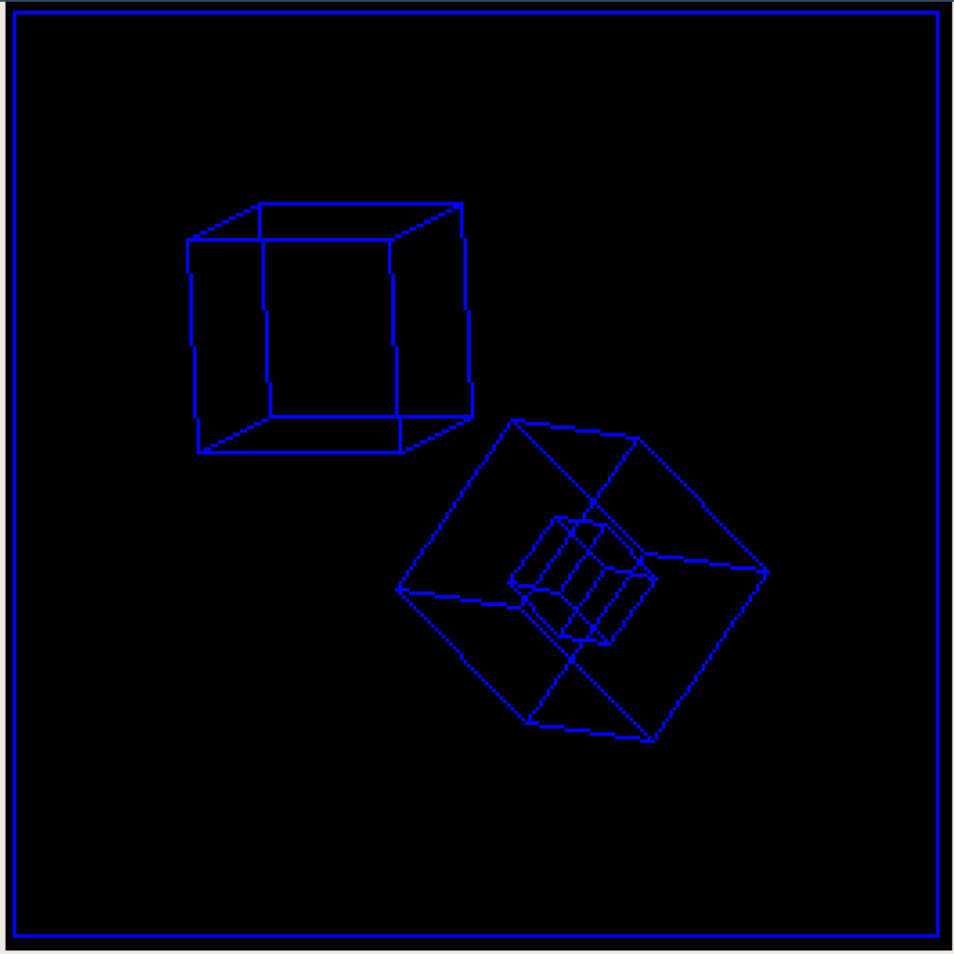

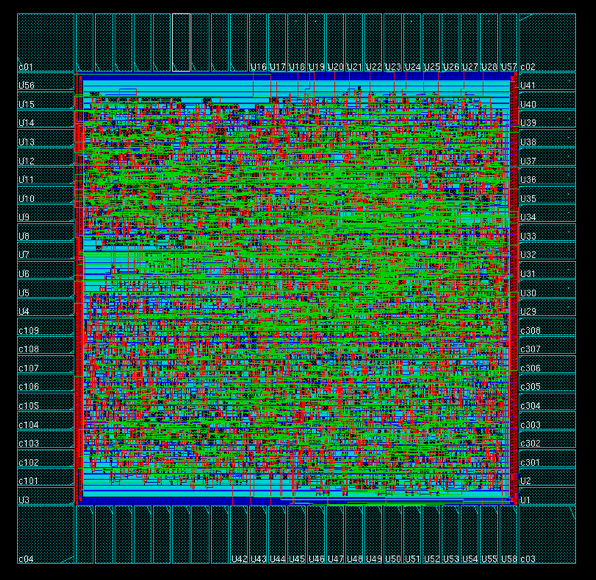

The final results are shown below. Figure 2 shows some basic shapes rendered by the GPU, and Figure 3 shows the actual chip design. For a more detailed, technical look, here is the final report PDF that we submitted for this project, and you can browse the actual VHDL code (both source and mapped) on GitHub. For a much more impressive demo, we also rendered a rotating shape and stored the output in files that can be viewed with a Java program. Download the code and follow the one-step procedure in the README to view the demo.

Figure 2: Some basic rendered shapes

Figure 3: Chip design snapshot
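Going back to the rasterizer: Bresenham's line algorithm suits hardware because it walks along a line using only integer additions, comparisons and a doubling (a one-bit shift). Below is a minimal Python sketch of the standard all-octant integer formulation, plus a hypothetical `wireframe` helper that outlines a polygon the way simple shapes like those in Figure 2 could be drawn. It is an illustration of the algorithm, not a transcription of our hardware port.

```python
def bresenham(x0: int, y0: int, x1: int, y1: int):
    """Return the pixels on the line from (x0, y0) to (x1, y1), inclusive,
    using the all-octant integer form of Bresenham's algorithm."""
    points = []
    dx = abs(x1 - x0)
    dy = -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy  # running error term; only integer adds and compares below
    while True:
        points.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err  # a one-bit left shift in hardware
        if e2 >= dy:  # step in x
            err += dy
            x0 += sx
        if e2 <= dx:  # step in y
            err += dx
            y0 += sy
    return points


def wireframe(vertices):
    """Rasterize the closed outline of a polygon given as integer (x, y) vertices."""
    pixels = set()
    for i, (x0, y0) in enumerate(vertices):
        x1, y1 = vertices[(i + 1) % len(vertices)]
        pixels.update(bresenham(x0, y0, x1, y1))
    return pixels


print(bresenham(0, 0, 5, 2))  # [(0, 0), (1, 0), (2, 1), (3, 1), (4, 2), (5, 2)]
```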

Unmanned Aerial Vehicle (Purdue University)

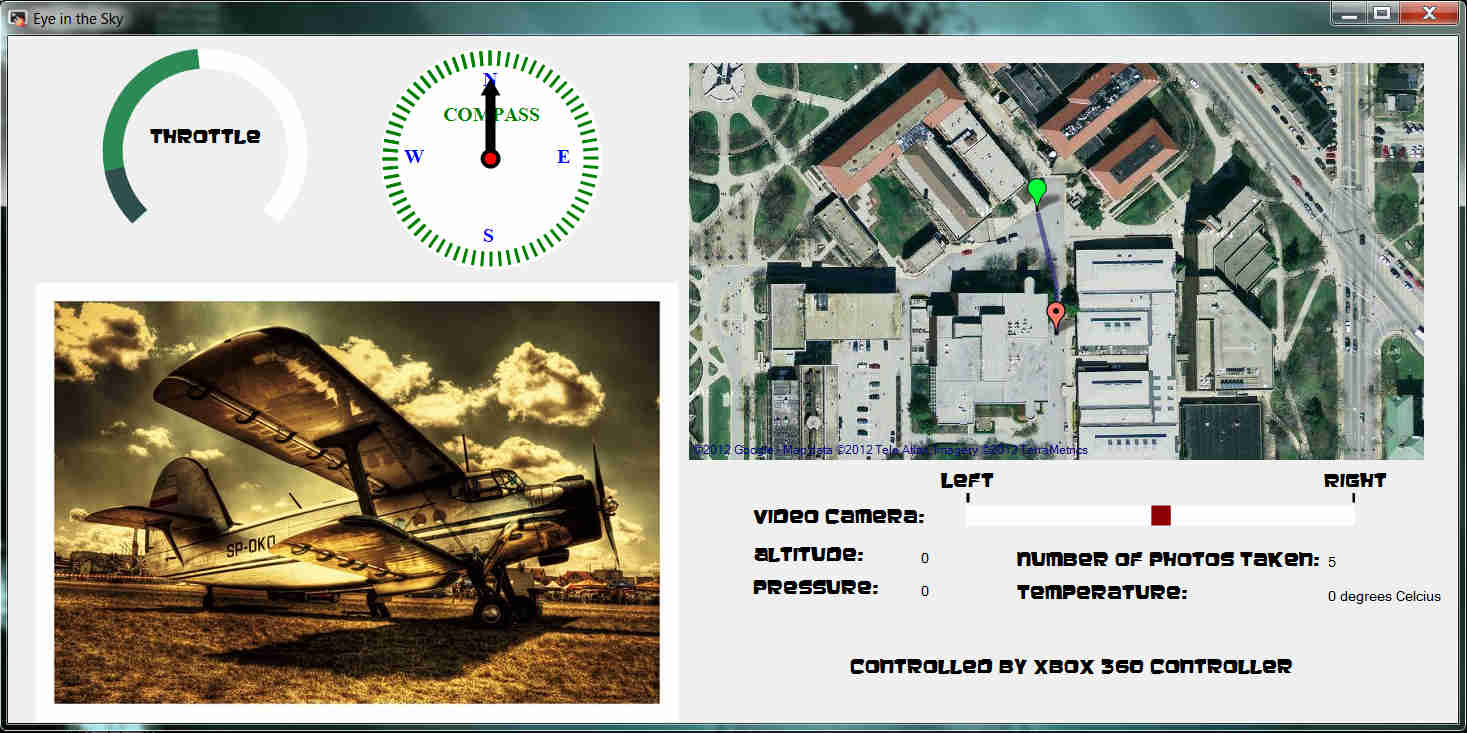

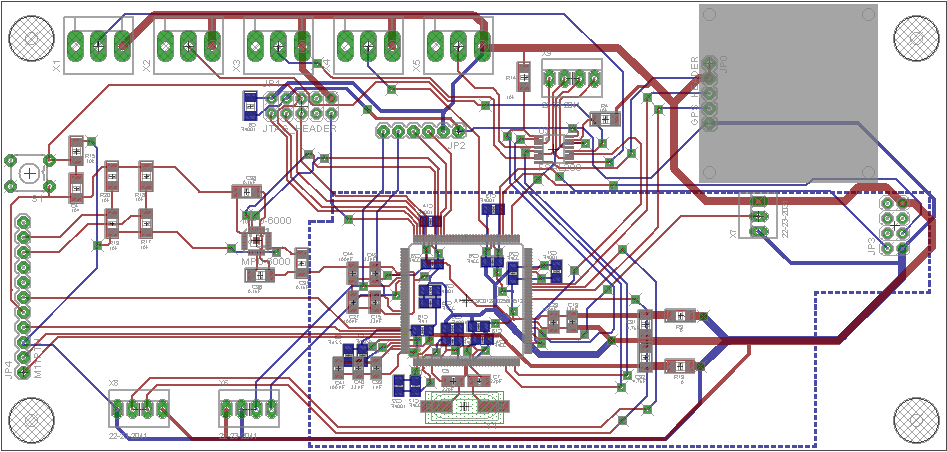

This was a four-person team project, completed in approximately 16 weeks during Spring 2012 as a Senior Design Project. Our team consisted of three electrical engineers and one computer engineer (i.e. me), so we chose a project that involved both hardware and software. Traditionally, ECE senior design projects that attempted aerial vehicles had not been successful, so naturally, when we heard about this 'copter-jinx', we decided to tackle it. We built an unmanned aerial drone by taking the shell of an RC plane, fitting it with our own custom PCB (sponsored by Purdue ECE) powered by an Atmel AT32UC3A3256 microcontroller, and writing base station code to control the UAV via an Xbox 360 controller. We also handled the communication between the base station and the UAV (XBee/serial based). The microcontroller was programmed in embedded C using AVR Studio, while the base station was programmed in C#.NET to make GUI programming easier. The UAV also had a live camera feed and was equipped to take high-resolution photos of the ground when required.

In this project, I was solely responsible for the base station programming, and also handled the programming and logic design for the microcontroller (though not the sensors or communications). For example, while I was not responsible for hooking up the XBee communications (baud rate, etc.), I did have to make sure that the communication was 'reliable', i.e. that data packets were not lost. In the end, though, I also helped with PCB routing and other aspects, and it turned out to be a real learning experience for me. On the base station end, I used C#.NET with socket libraries and the XNA game library to communicate with the UAV, send and receive data (and make it human-readable), and tune the sensitivity of the Xbox 360 controller to make the UAV actually flyable. Additionally, to reduce the load on the microcontroller and increase responsiveness, my base station code pre-calculated the flight data and sent the requisite hex values directly to the microcontroller to load into its registers. Below, you will find a screenshot of the GUI showing general flight data (Figure 4) and a screenshot of the main PCB design (Figure 5), which omits the power board (a separate design).

Figure 4: Main User Interface on base station

Figure 5: Main PCB design
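The base station itself was written in C# with XNA; the following is only an illustrative Python sketch of the ideas in the UAV paragraph above: shaping raw stick input so the aircraft stays controllable, precomputing on the base station the exact value the microcontroller would load into a timer register, and framing that value with a sequence number and checksum so corrupted packets can be detected and dropped. Everything specific here is hypothetical: the deadzone and expo constants, the timer resolution, the 6-byte packet layout and its 0xAA start byte. The 1000-2000 µs servo pulse range is a common RC convention, not necessarily what we used. Note that a checksum only detects corruption; recovering lost packets would additionally need acknowledgements and retransmission.

```python
import struct

# HYPOTHETICAL tuning and hardware constants, for illustration only.
DEADZONE = 0.1          # ignore small stick noise around center
EXPO = 0.6              # 0 = linear response, 1 = fully cubic (softer near center)
PULSE_MIN_US, PULSE_MAX_US = 1000, 2000  # common RC servo pulse range
TICKS_PER_US = 2        # assumed timer resolution on the microcontroller
START_BYTE = 0xAA       # assumed start-of-frame marker


def shape_axis(x: float) -> float:
    """Apply a deadzone and an exponential curve to a stick value in [-1, 1]."""
    x = max(-1.0, min(1.0, x))
    if abs(x) < DEADZONE:
        return 0.0
    sign = 1.0 if x > 0 else -1.0
    # Rescale so the output ramps up from 0 right at the deadzone edge.
    mag = (abs(x) - DEADZONE) / (1.0 - DEADZONE)
    return sign * ((1.0 - EXPO) * mag + EXPO * mag ** 3)


def axis_to_register(x: float) -> int:
    """Precompute, on the base station, the timer compare value for a servo pulse."""
    center = (PULSE_MIN_US + PULSE_MAX_US) / 2
    half_range = (PULSE_MAX_US - PULSE_MIN_US) / 2
    pulse_us = center + shape_axis(x) * half_range
    return int(round(pulse_us * TICKS_PER_US))


def checksum(body: bytes) -> int:
    """8-bit additive checksum over the packet body."""
    return sum(body) & 0xFF


def encode_packet(seq: int, channel: int, value: int) -> bytes:
    """Frame: start byte, sequence number, channel, 16-bit big-endian value, checksum."""
    body = struct.pack(">BBH", seq & 0xFF, channel, value)
    return bytes([START_BYTE]) + body + bytes([checksum(body)])


def decode_packet(frame: bytes):
    """Return (seq, channel, value), or None if the frame is malformed or corrupted."""
    if len(frame) != 6 or frame[0] != START_BYTE:
        return None
    body, check = frame[1:5], frame[5]
    if checksum(body) != check:
        return None
    return struct.unpack(">BBH", body)


frame = encode_packet(seq=7, channel=1, value=axis_to_register(1.0))
print(frame.hex(), decode_packet(frame))  # aa07010fa0b7 (7, 1, 4000)
```

Precomputing the register value moves the floating-point curve math to the PC, so the microcontroller only has to validate the frame and copy a 16-bit integer into a register.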

Since this was a relatively large project, listing everything we did here is impossible. We had to do everything from safety and environmental analysis to technical analysis, and present and defend our work each week. Fortunately, we had to create a website, hosted on Purdue's servers, to chronicle everything, and it can now be found in the Purdue archives. It includes our presentations and even our notebooks, which list the work done each week. Note that the website is not optimized for mobile viewing, and that the notebooks lost the ECE styling when they were archived, but all the content is still there. We also created a final report, a user's manual and a poster at the end. The code for the project (both embedded and base station) can be found on GitHub. Finally, we created a video for the final demonstration, which I have embedded below for your viewing pleasure!

Project Specific Success Criteria demo video

GizmoE (Carnegie Mellon University)

This was a two-person team project, completed in approximately 8 months over Spring and Summer 2013 as a Practicum project. The team consisted of two software engineers and, fairly unusually, had Carnegie Mellon's Robotics department as its customer. The background for this project was that the CMU Robotics department had created a robot called CoBot (Collaborative Bot) and was looking for ways to apply this technology to other areas. We were free to choose our software development lifecycle, but had to follow it all the way from requirements gathering to coding, testing and delivering the product. The software product we ended up delivering was an extendable, educational telepresence robotics platform. We built a task-based robotics platform that can be easily extended by adding new tasks and capabilities, so that educators with no special training can adapt telepresence robots to a wide range of teaching contexts and student characteristics. The system had a web-based component, an XML-based tasking language and an on-robot component.

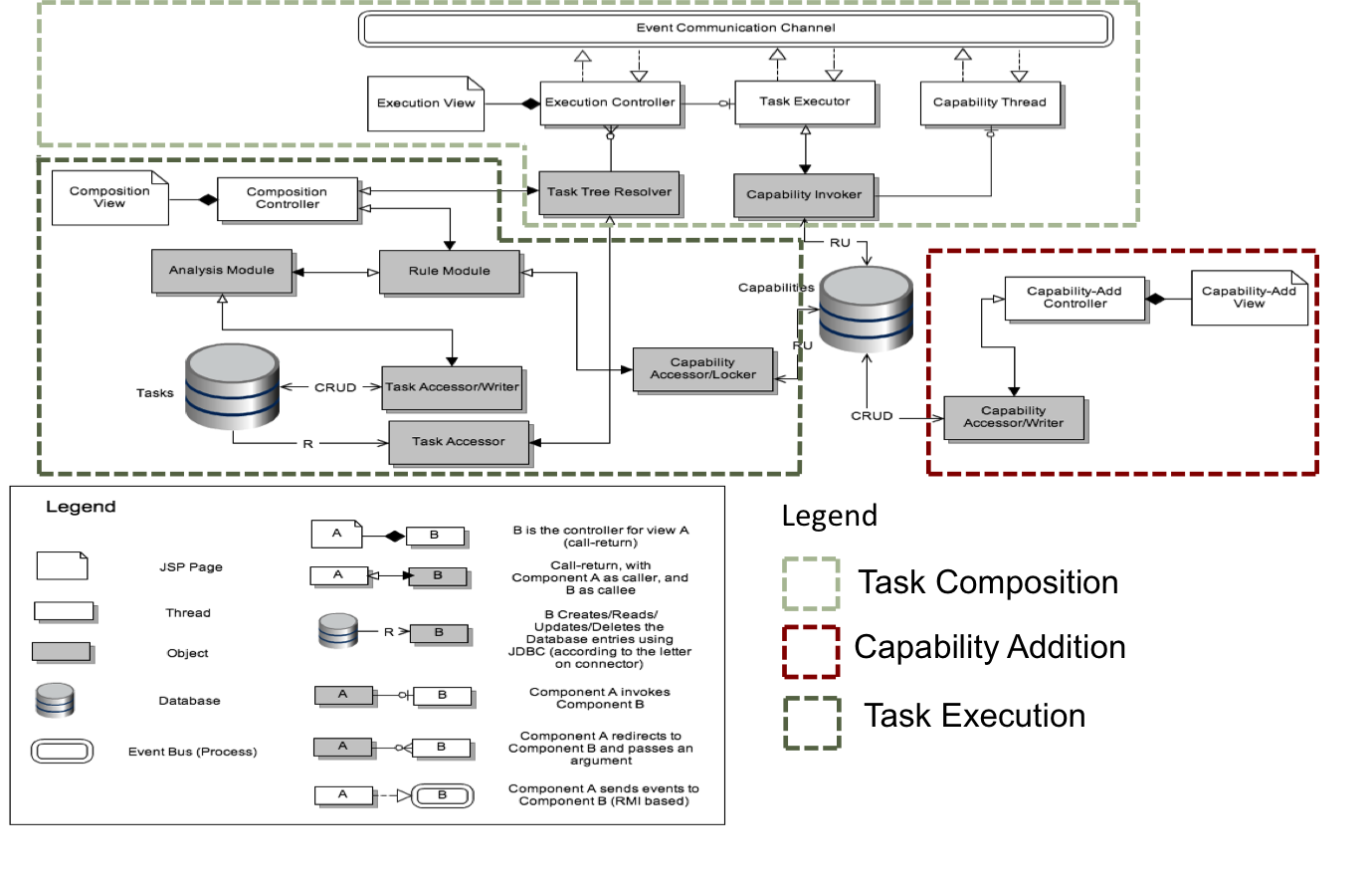

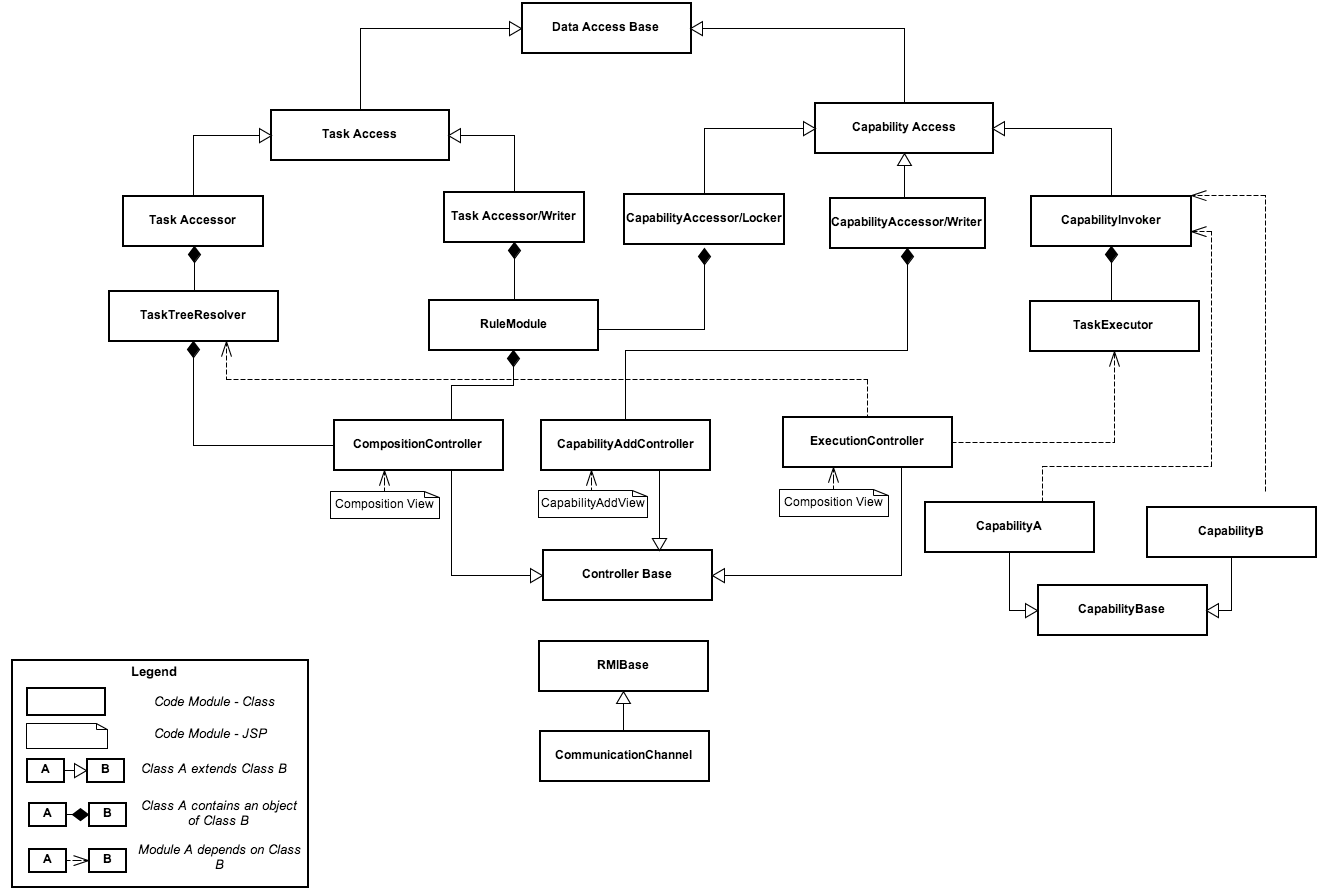

Being the more technically inclined of the two, I was generally responsible for all architectural and development work, while my partner opted to handle project management (we followed Agile Scrum + ACDM). When we got the robot, it was running ROS along with some Python-based 'capabilities' that the Robotics department had programmed. We wrote our own Python module that listened for commands on a specific port, parsed them and converted them into lower-level capabilities like "Rotate wheels for x seconds". On the other end, we had a webapp on a basic Apache Tomcat server, Apache ActiveMQ for messaging, a JavaScript-based UI, and Java controllers using the Struts MVC framework.

The controllers hooked into the tasking system itself, allowing the user to create and execute 'tasks' remotely. These 'tasks' were larger units of work that could be combined with one another in various ways, and internally consisted of capabilities strung together. One example of a task would be 'Go to room x in building y', where the tasking system had access to the map of building 'y'. Another would be 'Go talk to advisor', which internally could include 'Go to room x in building y' along with 'Start video conference'. So, once a user had created a 'task', it could be re-used in combination with other tasks. The overall architecture of the system is well beyond the scope of this portfolio site, but I have included the dynamic architecture in Figure 6 and a static view in Figure 7. Additionally, an architectural document can be found here.
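The GizmoE tasking system's real XML schema isn't shown on this page, so the following Python sketch invents a hypothetical one purely to show the composition idea described above: a task is a sequence of steps, each either a low-level capability or a reference to another task, so 'Go talk to advisor' can reuse 'Go to room x in building y'. The `task`, `capability` and `use` elements, the task names and the capability names are all made up. `expand` flattens a task into the ordered capabilities a robot would execute and rejects cyclic definitions. The original web side used Java controllers; Python is used here only for brevity.

```python
import xml.etree.ElementTree as ET

# HYPOTHETICAL schema: <task> holds <capability> steps (low-level robot
# actions; extra attributes become arguments) and <use> steps that reuse
# another task by name. All names below are invented for illustration.
TASKS_XML = """
<tasks>
  <task name="go_to_room">
    <capability name="navigate" building="y" room="x"/>
  </task>
  <task name="start_video_conference">
    <capability name="open_video_call"/>
  </task>
  <task name="go_talk_to_advisor">
    <use task="go_to_room"/>
    <use task="start_video_conference"/>
  </task>
</tasks>
"""


def load_tasks(xml_text: str) -> dict:
    """Parse task definitions into a {task name: <task> element} registry."""
    root = ET.fromstring(xml_text.strip())
    return {task.get("name"): task for task in root.findall("task")}


def expand(name: str, tasks: dict, _active: tuple = ()) -> list:
    """Flatten a (possibly composite) task into an ordered list of
    (capability name, arguments) pairs, rejecting cyclic definitions."""
    if name in _active:
        raise ValueError(f"cyclic task definition involving {name!r}")
    steps = []
    for step in tasks[name]:
        if step.tag == "capability":
            args = {k: v for k, v in step.attrib.items() if k != "name"}
            steps.append((step.get("name"), args))
        elif step.tag == "use":
            steps.extend(expand(step.get("task"), tasks, _active + (name,)))
        else:
            raise ValueError(f"unknown step <{step.tag}> in task {name!r}")
    return steps


plan = expand("go_talk_to_advisor", load_tasks(TASKS_XML))
print(plan)
```

Because a composite task only stores references, editing 'go_to_room' automatically changes every task that uses it, which is what makes tasks reusable building blocks.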

Since this was a multi-month project, listing everything done here is impossible. The overview above covers only some of the technical aspects, and the technical work itself was only a small part of the project. We did everything from initial requirements gathering (including customer sign-off and a formal SOW) and architecture design (including architectural experimentation) to development, testing, and project delivery and hand-off. Not all of the documents can be shared here, but we gave presentations at the midpoint and at the end to showcase our work to other Master's students and professors. These presentations were recorded, but unfortunately the recordings are only available to CMU Tartans. I was able to find my slides, though: the spring presentation can be found here, and the summer one is here. Fair warning: these aren't optimized for mobile and are about 15 MB each. The code for the project (only the task system, not the on-robot part) can be found on GitHub. For the final presentation, we also created a demo video along with a sample UI, since we couldn't have the audience follow the CoBot around the building. The code for that is in a separate GitHub repo, and I've included the video itself below (unfortunately there's no sound, as I talked over it during the presentation). Please contact me directly if you want to know more about this project!

GizmoE demo video
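The GizmoE overview above describes a Python module on the robot that listened for commands on a port, parsed them and converted them into lower-level capabilities. A minimal sketch of that pattern follows. The line-based text protocol (`NAME arg1 arg2 ...`), the command names, the port number and the stub capabilities are all hypothetical stand-ins; the real capabilities were provided by the Robotics department on top of ROS and are not shown. `CommandHandler` uses the standard-library `socketserver` module, and the commented-out lines at the end show how it could be served (they are not executed because `serve_forever` blocks).

```python
import socketserver


# HYPOTHETICAL stand-ins for the robot's Python capabilities. On the real
# robot these would drive ROS; here they just describe what they would do.
def rotate_wheels(seconds: float) -> str:
    return f"rotating wheels for {seconds:g}s"


def say(*words: str) -> str:
    return "saying: " + " ".join(words)


# Command name -> (capability function, type to convert each argument to).
CAPABILITIES = {
    "ROTATE_WHEELS": (rotate_wheels, float),
    "SAY": (say, str),
}


def dispatch(line: str) -> str:
    """Parse one 'NAME arg1 arg2 ...' command (name is case-insensitive),
    run the matching capability, and return an OK/ERR reply line."""
    parts = line.strip().split()
    if not parts:
        return "ERR empty command"
    name, raw_args = parts[0].upper(), parts[1:]
    if name not in CAPABILITIES:
        return f"ERR unknown command {name}"
    func, arg_type = CAPABILITIES[name]
    try:
        return "OK " + func(*(arg_type(a) for a in raw_args))
    except (TypeError, ValueError) as exc:  # bad argument count or format
        return f"ERR {exc}"


class CommandHandler(socketserver.StreamRequestHandler):
    """Read newline-terminated commands from a TCP client; reply once per line."""

    def handle(self):
        for raw in self.rfile:
            reply = dispatch(raw.decode("utf-8", errors="replace"))
            self.wfile.write((reply + "\n").encode("utf-8"))


print(dispatch("ROTATE_WHEELS 2.5"))  # OK rotating wheels for 2.5s

# To serve on a (hypothetical) port 9000; not run here because it blocks:
#   with socketserver.TCPServer(("0.0.0.0", 9000), CommandHandler) as server:
#       server.serve_forever()
```

Keeping parsing in a pure `dispatch` function separate from the socket handler makes the command layer testable without a robot or a network connection.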

More

I really like getting my hands dirty and learning by trying out new things on my own, so it's rare that I'm not working on one (or several) pet projects at any given time. The problem is that I rarely feel the urge to write; instead, I start feeling the itch to build something else. As you can guess, this leaves a fair number of significant projects that I haven't yet found the time to write a post-mortem summary for. Here's a list of other personal projects that I have worked on or am currently working on. I hope to dequeue items from this list one at a time and write a more detailed section above for each soon:

- Multicore Processor in MIPS Architecture (2012) - A multi-stage, pipelined, multi-core processor coded from scratch to run the MIPS instruction set, and tested on an FPGA.

- Machine Learning Projects (2013) - A decision tree implementation, a Naive Bayes classifier that distinguishes Republicans from Democrats based on text (trained on a large corpus), etc.

- iRobot Controllable "Dog" (2014) - A Roomba, an Arduino Mega, an Xbox controller, extra sensors and some embedded and C# code. Motivation: I was missing my dog.

- Home automation using WeMo (2014) - Some neat tricks to make my current home more convenient, using WeMo hardware, Python and Bash scripting.

- Platform on Heroku (2015) - A webapp being developed with MongoDB, ExpressJS, AngularJS and NodeJS, using Yeoman, Grunt and Bootstrap, plus an Android app that connects via a NodeJS-based engine. Everything will be hosted on Heroku; I'm using this project to keep up with the latest web technologies.
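The Naive Bayes classifier mentioned in the machine learning entry above is a classic bag-of-words technique. Below is a minimal, self-contained Python sketch of a multinomial Naive Bayes classifier with add-one (Laplace) smoothing. It is not the original project code, and the tiny training set with generic labels `A` and `B` (standing in for the two classes) is invented for illustration only. Scores are summed in log space to avoid floating-point underflow on long documents, and words never seen in training are ignored.

```python
import math
from collections import Counter, defaultdict


def tokenize(text: str) -> list:
    """Very simple bag-of-words tokenizer: lowercase and split on whitespace."""
    return text.lower().split()


class NaiveBayes:
    """Multinomial Naive Bayes text classifier with add-one (Laplace) smoothing."""

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(doc))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.total_words = {c: sum(wc.values()) for c, wc in self.word_counts.items()}
        return self

    def log_score(self, doc: str, label) -> float:
        """Unnormalized log posterior: log P(label) + sum of log P(word | label)."""
        n_docs = sum(self.class_counts.values())
        score = math.log(self.class_counts[label] / n_docs)
        denom = self.total_words[label] + len(self.vocab)
        for word in tokenize(doc):
            if word in self.vocab:  # words never seen in training are skipped
                score += math.log((self.word_counts[label][word] + 1) / denom)
        return score

    def predict(self, doc: str):
        return max(self.class_counts, key=lambda c: self.log_score(doc, c))


# Invented toy data; "A" and "B" stand in for the two classes.
docs = ["tax cut now", "cut the tax", "public healthcare now", "expand healthcare access"]
labels = ["A", "A", "B", "B"]
model = NaiveBayes().fit(docs, labels)
print(model.predict("tax cut"), model.predict("healthcare access"))  # A B
```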